Event-Sourced Conversational Agent: Deterministic Core, Generative Interface


TL;DR

Suppose your business case requires a clear audit trail of every action taken, complete control over state, validation of every command, and the ability to save an agent to disk and resume it later. Prompt engineering and a chat history alone won't get you there.

Event-sourced agents

We can borrow from domain-driven design and event-sourced applications to model an agent as a state machine that we fully control. The LLM is left with natural language understanding and generation only: translating user input into commands, and agent state into agent messages.

Motivation

Suppose your business case requires:

maintaining a clear audit trail of all actions taken.

supporting complex state transitions and business logic.

mapping the state to calls to other systems (e.g., filling in an order form, calling the checkout API).

making the agent’s state resumable after crashes or restarts.

Some examples of such applications include:

Shopping cart: add/remove items, checkout

Todo list: add/remove tasks, mark complete

Banking: deposit/withdraw, check balance

The agent is not searching or solving open-ended problems, and there is little room for tool use. It is simply a natural language interface to a state machine.

Agent main loop

In Python, we can implement the main loop as:

```python
def main_loop(state: Checkout, history: History) -> None:
    while True:
        user_message = input("User: ")
        # Record the user turn first so the response call sees it
        history.messages.append(Message(role=User(), text=user_message))

        command = parse_command_llm(user_message)  # Implement LLM call to parse command

        # Deterministic core: the state machine decides which events (if any) happen
        events = state.execute(command)
        for event in events:
            persist_event(event)  # Implement this to save event (append-only log)
            state.apply(event)    # Only persisted events change the in-memory state

        agent_response = generate_response_llm(state, history)  # Implement LLM call to generate response
        history.messages.append(Message(role=Agent(), text=agent_response))

        send_response(agent_response)  # Implement this to send response back to user
```

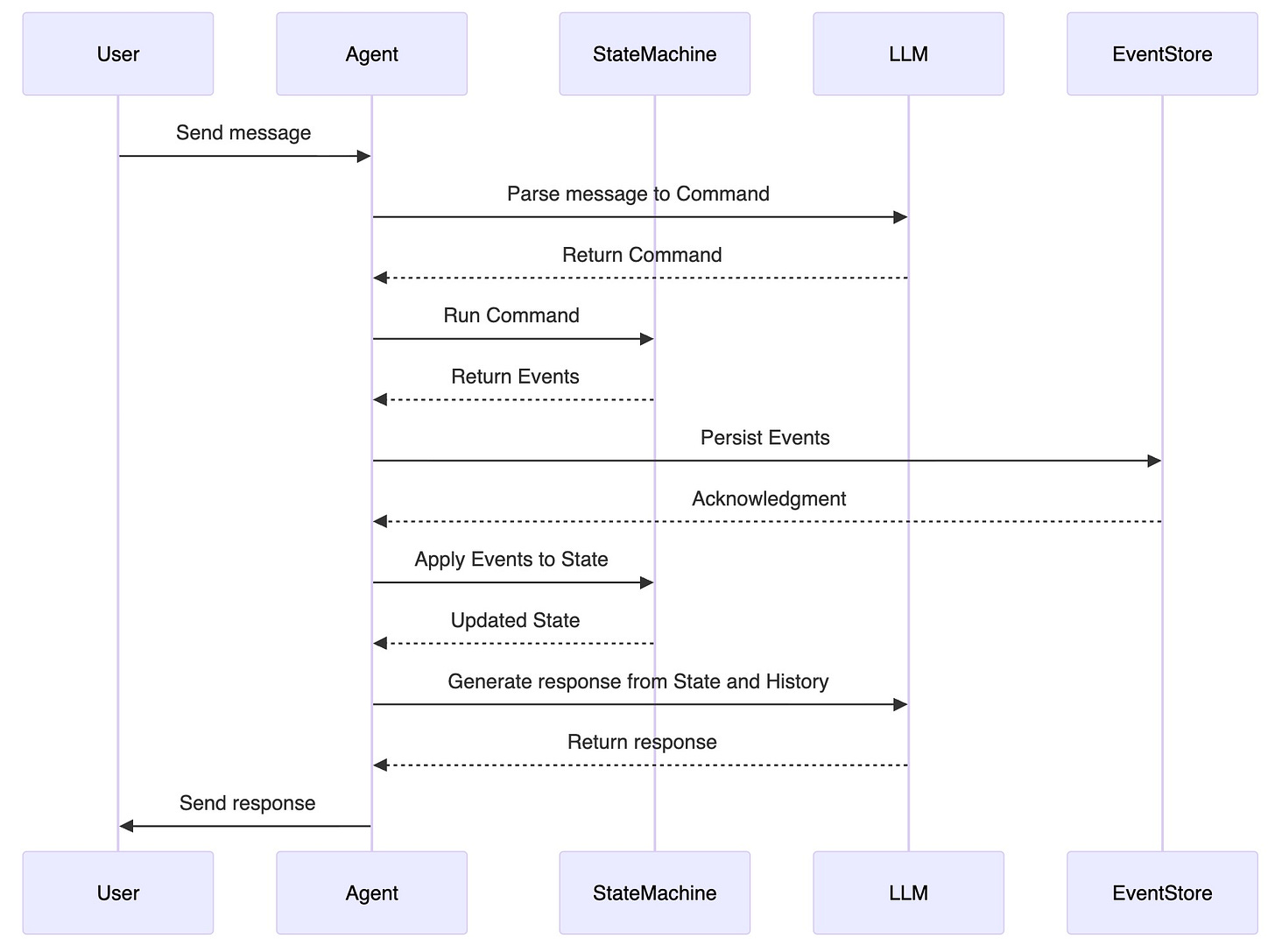

In other words, the agent:

Receives a message from the user.

Uses an LLM to parse the message into a command.

Executes the command against the state machine, producing events.

Persists the events to an event store.

Applies the events to the current state.

Uses an LLM to generate a response based on the updated state and history.

The interaction model

What we assume here is the classical event-sourcing interaction, annotated with types for clarity and drawn as a sequence diagram:
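The same contract can be written down as Python types. In the sketch below, which assumes a functional style, decide plays the role of execute and evolve the role of apply, except that evolve returns a new state instead of mutating it. Decide, Evolve, step and the toy cart types are hypothetical names, and the toy rules (for instance, no adding items after checkout) are simpler than the article's.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Callable, TypeVar

S = TypeVar("S")  # state
C = TypeVar("C")  # command
E = TypeVar("E")  # event

# decide: validate a command against the current state and emit zero or more events
Decide = Callable[[S, C], list[E]]
# evolve: fold one already-accepted event into the state (no validation here)
Evolve = Callable[[S, E], S]


def step(state: S, command: C, decide: Decide, evolve: Evolve) -> tuple[S, list[E]]:
    """One turn of the deterministic core: command -> events -> new state."""
    events = decide(state, command)
    for event in events:
        state = evolve(state, event)
    return state, events


# A toy cart to exercise the contract (hypothetical types, not the article's models)
@dataclass(frozen=True)
class Cart:
    items: tuple[str, ...] = ()
    checked_out: bool = False


@dataclass(frozen=True)
class AddItem:
    item_id: str


@dataclass(frozen=True)
class CheckoutCmd:
    pass


@dataclass(frozen=True)
class ItemAdded:
    item_id: str


@dataclass(frozen=True)
class CheckedOut:
    pass


def decide_cart(cart: Cart, command: AddItem | CheckoutCmd) -> list[ItemAdded | CheckedOut]:
    if isinstance(command, AddItem) and not cart.checked_out:
        return [ItemAdded(command.item_id)]
    if isinstance(command, CheckoutCmd) and cart.items and not cart.checked_out:
        return [CheckedOut()]
    return []  # rejected: no events, so the state cannot change


def evolve_cart(cart: Cart, event: ItemAdded | CheckedOut) -> Cart:
    if isinstance(event, ItemAdded):
        return replace(cart, items=cart.items + (event.item_id,))
    return replace(cart, checked_out=True)


cart, events = step(Cart(), AddItem("apple"), decide_cart, evolve_cart)
cart, events = step(cart, CheckoutCmd(), decide_cart, evolve_cart)
```

Keeping evolve free of validation is what makes replay safe: an event that was accepted once is always applied the same way.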

Generative calls

The generative part translates user messages into the correct commands. Commands are plain data: they are constrained to the schemas defined by the Pydantic models below and are fully serializable to JSON.

```python
import openai

def parse_command_llm(user_message: str) -> Command:
    # Implement LLM call to parse the user message into a Command
    response = openai.responses.parse(...)
    ...
```
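Because commands are plain data, whatever the model returns can be checked against the command schemas before it reaches the state machine. With the Pydantic models below, pydantic's TypeAdapter(Command).validate_json(raw) can do this job; the dependency-free sketch here only illustrates the idea, assuming JSON objects tagged with a "type" field. The flat command classes, COMMANDS, command_to_json and command_from_json are hypothetical simplifications.

```python
import json
from dataclasses import asdict, dataclass, fields


# Simplified, flat stand-ins for the Pydantic command models (hypothetical)
@dataclass(frozen=True)
class AddItem:
    item_id: str
    quantity: int


@dataclass(frozen=True)
class RemoveItem:
    item_id: str


@dataclass(frozen=True)
class CheckoutCommand:
    pass


# Each "type" tag maps to exactly one command class, mirroring CommandType
COMMANDS = {"add_item": AddItem, "remove_item": RemoveItem, "checkout": CheckoutCommand}
TAGS = {cls: tag for tag, cls in COMMANDS.items()}


def command_to_json(command) -> str:
    return json.dumps({"type": TAGS[type(command)], **asdict(command)})


def command_from_json(raw: str):
    """Turn raw model output into a command, or raise ValueError; never guess."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    tag = data.pop("type", None)
    cls = COMMANDS.get(tag) if isinstance(tag, str) else None
    if cls is None:
        raise ValueError(f"unknown or missing command type in {raw!r}")
    expected = {f.name for f in fields(cls)}
    if set(data) != expected:
        raise ValueError(f"{cls.__name__} expects fields {sorted(expected)}, got {sorted(data)}")
    return cls(**data)  # field value types are not checked here; Pydantic would do that
```

A reply that cannot be mapped to a known command raises instead of being silently coerced, so the state machine never sees it.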

A second generative call produces the reply from the current state and history, after the events have been applied to the state.

```python
def generate_response_llm(state: Checkout, history: History) -> str:
    # Implement LLM call to generate a response based on the current state and history
    response = openai.responses.create(...)
    ...
```
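What goes into this second call is a design decision. One option, sketched here under stated assumptions, is a deterministic prompt builder that always embeds the full, authoritative state and only a window of recent messages. render_response_prompt, CheckoutState, the max_turns parameter and the prompt wording are all hypothetical; the returned string would be passed as the input of the LLM call.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class Message:
    role: str  # "user" or "agent"
    text: str


@dataclass
class CheckoutState:
    # Plain-dict stand-in for the Checkout state (hypothetical simplification)
    items: list[dict] = field(default_factory=list)
    delivery_address: dict | None = None
    payment_method: dict | None = None
    checked_out: bool = False


def render_response_prompt(state: CheckoutState, history: list[Message], max_turns: int = 10) -> str:
    # The full state is always included, so truncating the transcript to the
    # last max_turns messages never loses information about the cart itself.
    transcript = "\n".join(f"{m.role}: {m.text}" for m in history[-max_turns:])
    state_json = json.dumps(asdict(state), indent=2, sort_keys=True)
    return (
        "You are a checkout assistant. Describe only what the state below says; "
        "do not invent items, prices or confirmations.\n\n"
        f"Current state (authoritative):\n{state_json}\n\n"
        f"Conversation so far:\n{transcript}\n\n"
        "Reply to the last user message."
    )
```

Because the prompt is a pure function of state and history, it can be unit-tested and logged alongside the events.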

Both calls rely on the rather tedious definitions of the commands, events, and state machine logic.

Boilerplate for commands, events, and state machine

Commands

```python
from enum import Enum
from typing import Union

from pydantic import BaseModel

# Item, Address and PaymentMethod are defined under State and History;
# define them first when putting everything in one module.

class CommandType(str, Enum):
    ADD_ITEM = "add_item"
    REMOVE_ITEM = "remove_item"
    SET_DELIVERY_ADDRESS = "set_delivery_address"
    SET_PAYMENT_METHOD = "set_payment_method"
    CHECKOUT = "checkout"

class AddItem(BaseModel):
    type: CommandType = CommandType.ADD_ITEM
    item: Item

class RemoveItem(BaseModel):
    type: CommandType = CommandType.REMOVE_ITEM
    item_id: str

class SetDeliveryAddress(BaseModel):
    type: CommandType = CommandType.SET_DELIVERY_ADDRESS
    address: Address

class SetPaymentMethod(BaseModel):
    type: CommandType = CommandType.SET_PAYMENT_METHOD
    payment_method: PaymentMethod

class CheckoutCommand(BaseModel):
    type: CommandType = CommandType.CHECKOUT

Command = Union[AddItem, RemoveItem, SetDeliveryAddress, SetPaymentMethod, CheckoutCommand]
```

Events

```python
from enum import Enum
from typing import Union

from pydantic import BaseModel

# Item, Address and PaymentMethod are defined under State and History;
# define them first when putting everything in one module.

class EventType(str, Enum):
    ITEM_ADDED = "item_added"
    ITEM_REMOVED = "item_removed"
    DELIVERY_ADDRESS_SET = "delivery_address_set"
    PAYMENT_METHOD_SET = "payment_method_set"
    CHECKED_OUT = "checked_out"

class ItemAdded(BaseModel):
    type: EventType = EventType.ITEM_ADDED
    item: Item

class ItemRemoved(BaseModel):
    type: EventType = EventType.ITEM_REMOVED
    item_id: str

class DeliveryAddressSet(BaseModel):
    type: EventType = EventType.DELIVERY_ADDRESS_SET
    address: Address

class PaymentMethodSet(BaseModel):
    type: EventType = EventType.PAYMENT_METHOD_SET
    payment_method: PaymentMethod

class CheckedOut(BaseModel):
    type: EventType = EventType.CHECKED_OUT

Event = Union[ItemAdded, ItemRemoved, DeliveryAddressSet, PaymentMethodSet, CheckedOut]
```

State and History

```python
from pydantic import BaseModel
from typing import Union, List
from enum import Enum

class Item(BaseModel):
    item_id: str
    quantity: int

class Address(BaseModel):
    street: str
    city: str
    zip_code: str

class PaymentMethodType(str, Enum):
    CREDIT_CARD = "credit_card"
    PAYPAL = "paypal"

class CreditCard(BaseModel):
    type: PaymentMethodType = PaymentMethodType.CREDIT_CARD
    card_number: str

class PayPal(BaseModel):
    type: PaymentMethodType = PaymentMethodType.PAYPAL
    email: str

PaymentMethod = Union[CreditCard, PayPal]

class RoleType(str, Enum):
    USER = "user"
    AGENT = "agent"

class User(BaseModel):
    type: RoleType = RoleType.USER

class Agent(BaseModel):
    type: RoleType = RoleType.AGENT

Role = Union[User, Agent]

class Message(BaseModel):
    role: Role
    text: str

class History(BaseModel):
    messages: List[Message] = []
```

State Machine Logic

Enough boilerplate; here is the actual state machine logic:

```python
from dataclasses import dataclass, field

@dataclass
class Checkout:
    items: list[Item] = field(default_factory=list)
    delivery_address: Address | None = None
    payment_method: PaymentMethod | None = None
    checked_out: bool = False

    def execute(self, command: Command) -> list[Event]:
        # Decide which events a command produces; never mutates the state.
        # Pydantic models only accept keyword arguments, hence item=..., etc.
        if isinstance(command, AddItem):
            return [ItemAdded(item=command.item)]
        elif isinstance(command, RemoveItem):
            return [ItemRemoved(item_id=command.item_id)]
        elif isinstance(command, SetDeliveryAddress):
            return [DeliveryAddressSet(address=command.address)]
        elif isinstance(command, SetPaymentMethod):
            return [PaymentMethodSet(payment_method=command.payment_method)]
        elif isinstance(command, CheckoutCommand):
            # Guard rail: an invalid checkout produces no events at all
            return [CheckedOut()] if self.can_checkout() else []
        else:
            raise ValueError("Unknown command")

    def apply(self, event: Event) -> None:
        # Evolve the state from an already-accepted event; no validation here
        if isinstance(event, ItemAdded):
            self.items.append(event.item)
        elif isinstance(event, ItemRemoved):
            self.items = [item for item in self.items if item.item_id != event.item_id]
        elif isinstance(event, DeliveryAddressSet):
            self.delivery_address = event.address
        elif isinstance(event, PaymentMethodSet):
            self.payment_method = event.payment_method
        elif isinstance(event, CheckedOut):
            self.checked_out = True

    def can_checkout(self) -> bool:
        return bool(self.items and self.delivery_address and self.payment_method and not self.checked_out)

def send_response(response: str) -> None:
    # Implement this to send response back to user
    print("Agent:", response)
```

Why does this matter?

In a complex system, you need to know exactly how the state changes in response to each event. Here every change is an explicit event handled in one place, the apply method, which makes the system easier to debug, maintain, and extend.
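For debugging in particular, every intermediate state can be recomputed by folding the event log one event at a time. A minimal sketch using itertools.accumulate, assuming a simplified immutable cart and event classes (hypothetical stand-ins for the Pydantic models); states_over_time is a hypothetical helper:

```python
from dataclasses import dataclass, replace
from itertools import accumulate


@dataclass(frozen=True)
class Cart:
    items: tuple[str, ...] = ()
    checked_out: bool = False


@dataclass(frozen=True)
class ItemAdded:
    item_id: str


@dataclass(frozen=True)
class ItemRemoved:
    item_id: str


@dataclass(frozen=True)
class CheckedOut:
    pass


def evolve(cart: Cart, event) -> Cart:
    """Pure counterpart of Checkout.apply: return a new state for one event."""
    if isinstance(event, ItemAdded):
        return replace(cart, items=cart.items + (event.item_id,))
    if isinstance(event, ItemRemoved):
        return replace(cart, items=tuple(i for i in cart.items if i != event.item_id))
    if isinstance(event, CheckedOut):
        return replace(cart, checked_out=True)
    raise ValueError(f"unknown event: {event!r}")


def states_over_time(events: list, initial: Cart = Cart()) -> list[Cart]:
    """The state before any event, then the state after each event, in order."""
    return list(accumulate(events, evolve, initial=initial))


log = [ItemAdded("apple"), ItemAdded("pear"), ItemRemoved("apple"), CheckedOut()]
for n, state in enumerate(states_over_time(log)):
    print(n, state)
```

Answering "how did the cart end up like this?" then amounts to reading the printed sequence rather than reconstructing it from chat transcripts.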

Strong guard rails

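The agent can only execute a closed set of commands, each with known effects on the state, and a command that the business rules reject (for example, checking out an empty cart) produces no events. This reduces the risk of unexpected behavior.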
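One way to check this property is to drive the state machine with arbitrary commands, standing in for an LLM that may parse anything, and assert the business invariant after every step. The sketch assumes a reduced Checkout with string commands and events (hypothetical simplifications) but keeps the same can_checkout rule as the class above; fuzz is a hypothetical helper.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Checkout:
    items: list = field(default_factory=list)
    has_address: bool = False
    has_payment: bool = False
    checked_out: bool = False

    def can_checkout(self) -> bool:
        return bool(self.items and self.has_address and self.has_payment and not self.checked_out)

    def execute(self, command: str) -> list[str]:
        # Decide which events (if any) a command produces; never mutates self
        if command == "add_item":
            return ["item_added"]
        if command == "set_address":
            return ["address_set"]
        if command == "set_payment":
            return ["payment_set"]
        if command == "checkout":
            return ["checked_out"] if self.can_checkout() else []
        raise ValueError(f"unknown command: {command}")

    def apply(self, event: str) -> None:
        if event == "item_added":
            self.items.append(f"item-{len(self.items)}")
        elif event == "address_set":
            self.has_address = True
        elif event == "payment_set":
            self.has_payment = True
        elif event == "checked_out":
            self.checked_out = True


def fuzz(seed: int, steps: int = 200) -> list[str]:
    """Play random commands, as an unreliable parser might, and return the event log."""
    rng = random.Random(seed)
    state, log = Checkout(), []
    for _ in range(steps):
        command = rng.choice(["add_item", "set_address", "set_payment", "checkout"])
        for event in state.execute(command):
            log.append(event)
            state.apply(event)
        # Invariant: a checkout only ever happens with items, address and payment
        assert not state.checked_out or (state.items and state.has_address and state.has_payment)
    return log
```

However noisy the parsed commands are, the log can contain at most one checkout, and only after the required events.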

The agent cannot “lose context”, because the state lives in the state machine we own rather than in the chat history.

What is the role of LLMs here?

Here, LLMs implement exactly two functions, shown with pseudocode type hints, where LLM[A] denotes a function that returns a value of type A by calling an LLM:

```python
# Pseudocode: LLM[A] is a notation, not an importable Python type
def parse_command_llm(user_message: str) -> LLM[Command]: ...
def generate_response_llm(state: Checkout, history: History) -> LLM[str]: ...
```

The first function translates natural language user messages into structured commands that the state machine can understand and process.

The second function generates human-readable responses based on the current state of the system and the history of interactions.

There is no other role for LLMs in this architecture. The state machine logic is entirely deterministic and does not involve any generative components.
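One possible reading of LLM[A] in real Python is a small generic wrapper that pairs a call producing raw model text with a parser that returns an A or raises ValueError. The retry-with-feedback loop is an added design choice, not part of the architecture; LLM, run, max_attempts, parse_command and the scripted fake model are hypothetical, the fake standing in for an actual API call.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

A = TypeVar("A")


@dataclass
class LLM(Generic[A]):
    """A value of type A obtained by calling a language model."""

    call: Callable[[str], str]  # prompt -> raw model output (e.g. an API call)
    parse: Callable[[str], A]   # raw output -> A, raising ValueError if invalid

    def run(self, prompt: str, max_attempts: int = 3) -> A:
        last_error: ValueError | None = None
        for _ in range(max_attempts):
            raw = self.call(prompt)
            try:
                return self.parse(raw)
            except ValueError as err:
                last_error = err
                # Feed the validation error back so the next attempt can correct itself
                prompt = f"{prompt}\n\nYour previous answer was invalid ({err}). Try again."
        raise RuntimeError(f"no valid output after {max_attempts} attempts") from last_error


def parse_command(raw: str) -> dict:
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or "type" not in data:
        raise ValueError("expected a JSON object with a 'type' field")
    return data


# A scripted fake model: first an unparseable reply, then a valid one
scripted_replies = iter(["Sure! Checking you out now.", '{"type": "checkout"}'])


def fake_model(prompt: str) -> str:
    return next(scripted_replies)


parse_command_llm: LLM[dict] = LLM(call=fake_model, parse=parse_command)
print(parse_command_llm.run("User: that's all, check me out"))
```

Whatever the wrapper does internally, the state machine only ever receives a value of type A, never raw model text.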

Conclusion

This architecture provides a robust framework for agents that require fine-grained control over state and actions. The role of the LLM is limited to interpreting user intent and generating responses, that is, the functions parse_command_llm and generate_response_llm. The state machine handles all the business logic and state transitions, ensuring that the system behaves predictably and reliably.

As a bonus, the entire state of the agent can be reconstructed by replaying the event log, enabling:

Serialization of the agent’s state for persistence or transmission.

Auditing of all actions taken by the agent.

Both are essential in production systems.
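A minimal sketch of persist_event and the rebuild step, assuming an append-only JSON Lines file as the event store (a production system would more likely use a database or a dedicated event store) and dict-shaped events using the EventType tags. With the Pydantic events, event.model_dump(mode="json") yields such a dict. append_event, load_events, apply_event and rehydrate are hypothetical names.

```python
import json
from pathlib import Path


def append_event(path: Path, event: dict) -> None:
    """Append one event as a single JSON line; history is never rewritten."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(event, sort_keys=True) + "\n")


def load_events(path: Path) -> list[dict]:
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def apply_event(state: dict, event: dict) -> dict:
    """Pure version of Checkout.apply over a dict-shaped state."""
    kind = event["type"]
    if kind == "item_added":
        return {**state, "items": state["items"] + [event["item"]]}
    if kind == "item_removed":
        return {**state, "items": [i for i in state["items"] if i["item_id"] != event["item_id"]]}
    if kind == "delivery_address_set":
        return {**state, "delivery_address": event["address"]}
    if kind == "payment_method_set":
        return {**state, "payment_method": event["payment_method"]}
    if kind == "checked_out":
        return {**state, "checked_out": True}
    raise ValueError(f"unknown event type: {kind}")


def rehydrate(path: Path) -> dict:
    """Rebuild the current state by replaying the whole log from the empty state."""
    state = {"items": [], "delivery_address": None, "payment_method": None, "checked_out": False}
    for event in load_events(path):
        state = apply_event(state, event)
    return state
```

Resuming an agent after a crash is then a call to rehydrate on its log file, and the same file doubles as the audit trail.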