Building Validable, Complete Conversational Forms with AI Agents

Turning static forms into adaptive, auditable conversations.

In this project, we build an interactive, complete questionnaire system powered by an AI agent.

The agent talks with the user, fills in structured data, validates it, and logs every step, bridging deterministic form logic with natural conversation. We keep the state explicitly outside the agent, so we retain precise control over whether all data has been collected and whether it is valid.

We present a minimal architecture where a language-model agent interacts with a structured schema, filling and validating fields through explicit tool calls. The process is deterministic, logged, and resumable, turning form-filling into a transparent dialogue between logic and language.

Background

Filling forms is a universal problem, from customer onboarding to compliance reports.

Yet, most forms are static: they don’t adapt, reason, or explain missing information.

By embedding the form’s structure into an agent runtime, we create a conversational process that is transparent, traceable, and interactive.

The AI becomes a guided interviewer rather than a passive input collector. It can help the user convert numeric figures, point out possible input errors that pass formal validation (semantic errors), and more.

Motivation

Traditional form interfaces have fixed logic and no memory.

Agents, on the other hand, can:

Ask only for what’s missing, referring to the schema.

Validate fields as they’re entered, also referring to the schema.

Point out possible errors beyond common validation.

Log each tool invocation (for auditability).

Keep track of the form state.

This pattern merges structured programming with autonomous agent reasoning, making workflows explainable and composable: as long as you can specify the data you must gather, you can merge two specifications.

Additionally, the agent might notice inconsistencies in the provided data, such as an e-mail address that contains a typo relative to the user's last name, for example.

Objective

We should be able to:

Represent the form schema as structured data.

Track user progress and completion state.

Define callable tools for filling and checking fields.

Let an agent orchestrate the dialogue.

Produce a fully auditable completion log.

And with that, we can extend it to:

CRM qualification bots.

Compliance intake assistants.

Customer or employee onboarding flows.

Implementation

Steps

Define the schema (Field, Group, Form).

Create a state manager (QualificationState).

Expose tool functions for filling and validation.

Run an interactive agent loop.

Log and verify every step.

The schema

We model the form hierarchy with Python dataclasses. This is not exactly the agent state, since it is immutable (read-only). It holds the specification: which variables to collect, their types, whether they are optional, and how to group them. In a production setting we would support more complex data types, validations, groupings, repeating entries, etc.

from dataclasses import dataclass

@dataclass
class Field:
    name: str
    type: str
    required: bool

@dataclass
class Group:
    name: str
    fields: list[Field]

@dataclass
class Form:
    title: str
    groups: list[Group]
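As an illustration, a form instance built from these dataclasses might look like the sketch below. The field names are taken from the example transcript later in the post; the form title, group name, type labels, and required flags are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    type: str
    required: bool


@dataclass
class Group:
    name: str
    fields: list[Field]


@dataclass
class Form:
    title: str
    groups: list[Group]


# Hypothetical example form; only the field names come from the transcript.
qualification_form = Form(
    title="Qualification Form",
    groups=[
        Group(
            name="Personal",
            fields=[
                Field(name="Full Name", type="string", required=True),
                Field(name="Email Address", type="email", required=True),
                Field(name="Favorite Food", type="string", required=False),
            ],
        )
    ],
)

# The specification can be walked like any nested structure.
required_names = [
    f.name for g in qualification_form.groups for f in g.fields if f.required
]
print(required_names)
```

Because the specification is plain data, the same walk can drive both the prompt text and the completion check.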

We could add validation here. While Python's dataclasses can support validation hooks, Pydantic would make the models easy to present to an LLM via their JSON schema dumps.
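To make the dataclass option concrete, here is a minimal sketch of a validation hook using `__post_init__`, plus a JSON dump of one field specification via `dataclasses.asdict`. The `ALLOWED_TYPES` set and the `ValidatedField` name are assumptions made for this illustration, not part of the original code.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical set of type labels the form is willing to accept.
ALLOWED_TYPES = {"string", "email", "integer"}


@dataclass(frozen=True)
class ValidatedField:
    name: str
    type: str
    required: bool

    def __post_init__(self) -> None:
        # Dataclasses run this hook right after __init__, so bad specs fail early.
        if not self.name.strip():
            raise ValueError("Field name must not be empty.")
        if self.type not in ALLOWED_TYPES:
            raise ValueError(f"Unsupported field type: {self.type!r}")


email = ValidatedField(name="Email Address", type="email", required=True)

# asdict() gives a plain dict that can be dumped to JSON and shown to a model.
schema_json = json.dumps(asdict(email))
print(schema_json)

try:
    ValidatedField(name="Age", type="years", required=False)
except ValueError as exc:
    print(f"Rejected: {exc}")
```

Note that this hook validates the specification itself; validating the user's answers is a separate concern that belongs with the state.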

The form and qualification state

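The qualification state tracks which fields are filled, checks completion, and logs actions.

The state itself consists of a Form, its filled data as a dict[str, str], and an is_complete flag.

@dataclass
class QualificationState:
    form: Form
    filled_data: dict[str, str]
    # Updated by check_completion(); initialized so the agent loop can read it before the first check.
    is_complete: bool = False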

A simple method updates a field. On the agent side, we will also provide a tool to read the current fields.

    # Method of QualificationState
    def fill_field(self, field_name: str, value: str):
        for group in self.form.groups:
            for field in group.fields:
                if field.name == field_name:
                    self.filled_data[field_name] = value
                    tool_logger.info(f"Field {field_name} filled with value {value}.")
                    return
        tool_logger.warning(f"Field {field_name} not found in the form.")

The form state can be checked to see which fields are missing, something the agent will query.

    # Method of QualificationState
    def check_completion(self) -> list[str]:
        missing = []
        for group in self.form.groups:
            for field in group.fields:
                if field.required and field.name not in self.filled_data:
                    missing.append(field.name)
        self.is_complete = len(missing) == 0
        return missing

For completeness, we point out that this is where we would serialize the agent's state so that it can be stopped and resumed.

    def save_state(self):
        # Placeholder for saving state to a database or file
        pass
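One possible shape for that placeholder is a JSON round trip of the collected answers. The sketch below is an assumption rather than the post's implementation: the `save_answers` and `load_answers` helper names and the file name are hypothetical, and only the answers are persisted because the form specification is static.

```python
import json
import tempfile
from pathlib import Path


def save_answers(filled_data: dict[str, str], path: Path) -> None:
    """Write the collected answers to disk as JSON (hypothetical helper)."""
    path.write_text(json.dumps(filled_data, indent=2), encoding="utf-8")


def load_answers(path: Path) -> dict[str, str]:
    """Read answers back; an absent file means a fresh session."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


# Round trip in a temporary directory to show a session being resumed.
with tempfile.TemporaryDirectory() as tmp:
    state_file = Path(tmp) / "qualification_state.json"
    fresh = load_answers(state_file)  # nothing saved yet
    save_answers({"Full Name": "Vinnie Colaiuta"}, state_file)
    resumed = load_answers(state_file)  # picks up where we left off

print(fresh, resumed)
```

Calling the save helper after every successful fill would keep the stored answers in step with the audit log.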

Adding validation for different data types would naturally imply a function that returns errors, which could be used in place of returning only the missing fields in check_completion. I would probably rename it to validate_form_data.
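A rough sketch of what such a `validate_form_data` function could look like follows. It works on plain `(name, type, required)` tuples to stay self-contained; the type labels, the error messages, and the deliberately loose email pattern are assumptions for illustration.

```python
import re
from typing import Optional

# Loose, illustrative pattern: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def check_value(type_name: str, value: str) -> Optional[str]:
    """Return an error message for a single value, or None if it looks fine."""
    if type_name == "email" and not EMAIL_RE.match(value):
        return "not a valid email address"
    if type_name == "integer":
        try:
            int(value)
        except ValueError:
            return "not an integer"
    return None


def validate_form_data(
    spec: list[tuple[str, str, bool]], filled_data: dict[str, str]
) -> dict[str, str]:
    """Map each problematic field name to an error; an empty dict means valid."""
    errors: dict[str, str] = {}
    for name, type_name, required in spec:
        if name not in filled_data:
            if required:
                errors[name] = "missing required field"
            continue
        problem = check_value(type_name, filled_data[name])
        if problem:
            errors[name] = problem
    return errors


spec = [
    ("Full Name", "string", True),
    ("Email Address", "email", True),
    ("Favorite Food", "string", False),
]
errors = validate_form_data(spec, {"Email Address": "vinnie_c-at-gmail.com"})
print(errors)
```

Returning a mapping rather than a list lets the agent tell the user why a field is still open, not just that it is.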

Logging and setup

In case you need help setting up a nice logger, you can use the following code; other snippets in this post use this setup.

import logging
from logging import getLogger

tool_logger = getLogger("tool_logger")
tool_logger.setLevel("INFO")
tool_handler = logging.StreamHandler()
tool_handler.setFormatter(logging.Formatter("%(asctime)s - %(message)s"))
tool_logger.addHandler(tool_handler)
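If the log should double as an audit trail, one option is a second handler that keeps the records somewhere you can inspect later. The sketch below collects formatted records in a list; the `ListHandler` class and the `audit_demo` logger name are assumptions for illustration, and `logging.FileHandler` would be the standard-library choice for writing to disk.

```python
import logging


class ListHandler(logging.Handler):
    """Collects formatted log lines in memory (hypothetical audit sink)."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


audit_logger = logging.getLogger("audit_demo")
audit_logger.setLevel(logging.INFO)
audit_logger.propagate = False  # keep the demo output out of the root logger

audit_handler = ListHandler()
audit_handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))
audit_logger.addHandler(audit_handler)

audit_logger.info("Field Full Name filled with value Vinnie Colaiuta.")
audit_logger.warning("Missing: Email Address")

for line in audit_handler.lines:
    print(line)
```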

Defining tools

Each tool is decorated with @function_tool, exposing deterministic APIs to the agent.

One tool writes to the form state:

from agents import function_tool  # assuming the OpenAI Agents SDK, which matches the names used in this post

@function_tool
def fill_qualification_form(group: str, field: str, value: str) -> str:
    tool_logger.info(f"Filling field {field} in group {group} with value {value}.")
    qualification_state.fill_field(field, value)
    return f"Field {field} in group {group} filled with value {value}."

A second tool reads the state, which is useful both for output and for updating values according to rules.

@function_tool
def read_filled_data() -> dict[str, str]:
    tool_logger.info("Reading filled data.")
    return qualification_state.filled_data

The third tool provides feedback to the agent, so it can plan the next step.

@function_tool
def check_completion() -> list[str]:
    tool_logger.info("Checking for missing fields.")
    missing = qualification_state.check_completion()
    if missing:
        tool_logger.warning(f"Missing: {', '.join(missing)}")
    return missing

The agent runtime

A loop drives the agent, which continues to interact with the user until the form data is complete and valid. This is a very standard loop; I used the Responses API so that conversation state is less verbose to maintain in this demo.

from agents import Agent, Runner  # assuming the OpenAI Agents SDK, which matches the names used in this post

agent = Agent(
    name="QualificationAgent",
    instructions=instructions,
    model="gpt-4o",
    tools=[fill_qualification_form, check_completion, read_filled_data],
)

previous_response_id: str | None = None

while not qualification_state.is_complete:
    user_input = input("User: ")
    response = Runner.run_sync(
        starting_agent=agent,
        input=user_input,
        previous_response_id=previous_response_id,
    )
    previous_response_id = response.last_response_id
    print(response.final_output_as(str))

Once all required fields are filled, the agent announces completion and prints the final dataset.
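The termination logic can be exercised without calling a model. In the sketch below, a scripted `fake_turn` function stands in for one agent run and the tool calls it would make; that function, the `REQUIRED` list, and the `max_turns` guard are assumptions added for illustration, not part of the demo above.

```python
REQUIRED = ["Full Name", "Email Address"]  # hypothetical required fields
filled_data: dict[str, str] = {}


def check_completion() -> list[str]:
    """Return the required fields that have not been filled yet."""
    return [name for name in REQUIRED if name not in filled_data]


def fake_turn(user_input: str) -> str:
    """Stand-in for one agent run: fill the next missing field, then report."""
    missing = check_completion()
    if missing:
        filled_data[missing[0]] = user_input
    remaining = check_completion()
    return f"Still need: {', '.join(remaining)}" if remaining else "All done."


scripted_inputs = iter(["Vinnie Colaiuta", "vinnie_c@gmail.com"])
replies: list[str] = []
max_turns = 10  # safety cap so a stuck conversation cannot loop forever
turns = 0

# The loop is driven by form state, not by a fixed number of interactions.
while check_completion() and turns < max_turns:
    replies.append(fake_turn(next(scripted_inputs)))
    turns += 1

print(replies)
```

A turn cap like this is optional; it only bounds the cost of a conversation that never converges, while completion is still decided by the form state.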

The instructions, specifically, are assembled from data. They read in the form specification and then tell the model which tools it can use. That said, remember that the loop's termination condition is not tied to a number of tool calls or interactions; the loop stops only when the data has been collected.

instructions = (

f”Please help the user fill out the following form:\n”

f”Title: {qualification_form.title}\n”

)

# groups

for group in qualification_form.groups:

instructions += f”Group: {group.name}\n”

for field in group.fields:

req = “Required” if field.required else “Optional”

instructions += f”- {field.name} ({field.type}, {req})\n”

instructions += (

“Use the provided tools to fill fields, check for errors, and identify missing required fields.”

“Proceed by asking the user for the necessary information. Fill it”

“using the fill_qualification_form tool. After each input, check for errors and missing fields.”

“Use the `check_errors` and `missing` tools as needed.”

“If the input looks weird; ask the user to clarify.”

“Then confirme before moving on to the next field.”

“Look for possible typos and suggest corrections.”

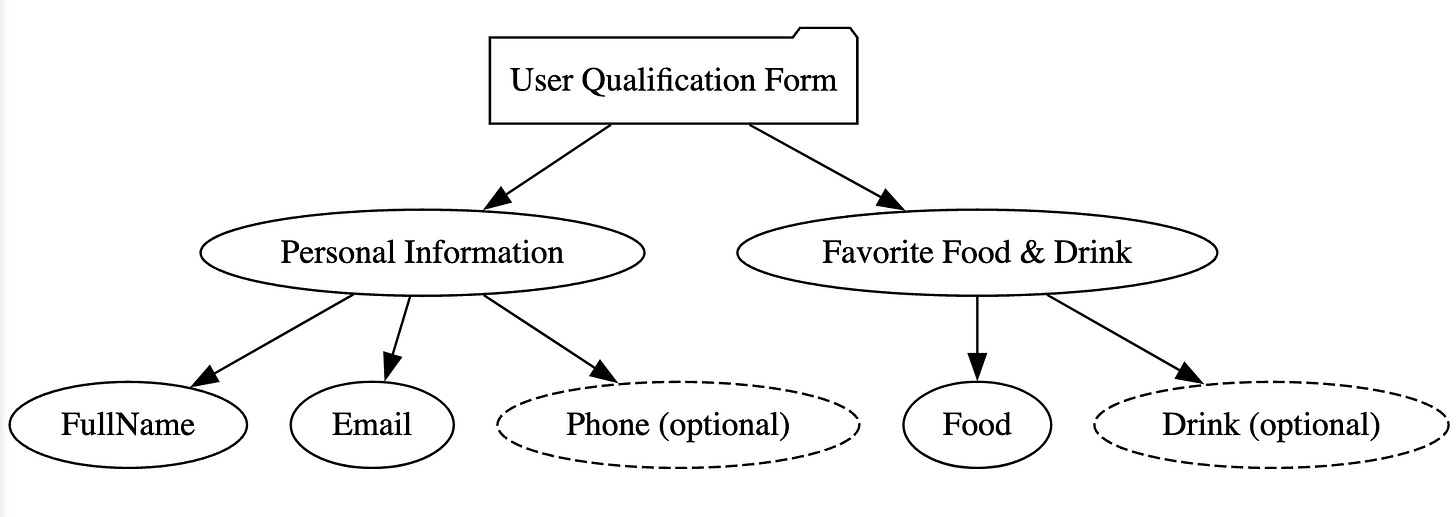

)Visualizing the form

Visually, the form schema can be represented as:

The agent sees a form as a graph of dependencies it must satisfy. This structure mirrors the conversational hierarchy the agent follows during dialogue: it works in blocks, going over each piece of data it needs to capture.

Transcript, example

The following transcript shows a short interaction between the agent and a user, demonstrating live field validation and completion.

Agent: Hello! Let’s get started. What is your full name?

User: My name is Vinnie Colaiuta.

2025-10-13 23:02 — Field “Full Name” filled with value “Vinnie Colaiuta.”

Agent: Great, Vinnie. What’s your favorite food?

User: My favorite food is sushi.

2025-10-13 23:03 — Field “Favorite Food” filled with value “sushi.”

Agent: Thanks! I still need your email address to complete the form.

User: My email is vinnie_c@gmail.com.

2025-10-13 23:04 — Field “Email Address” filled with value “vinnie_c@gmail.com.”

When all required fields are filled:

Agent: Perfect. All required fields are now complete.

Form submission successful.

Filled Data:

Full Name: Vinnie Colaiuta

Email Address: vinnie_c@gmail.com

Favorite Food: sushi

Conclusion

By structuring the form, its data, and the collection logic as part of a class, we could get an LLM to use tools that call methods on that class.

Then, the LLM could drive the conversation to fill the form, check for errors, and confirm completion.

Other takeaways:

Keeping an instance across calls allowed us to keep state, although of course the tools could instead have queried an external database or API given an instance ID.

The qualification state class, via its check_completion method, helps the LLM understand what is missing.

If the check_completion method returns no missing fields, the LLM can decide to end the conversation; at that point the form and its answers should be complete and saved.

That’s it folks, thanks for reading!